Project Lead

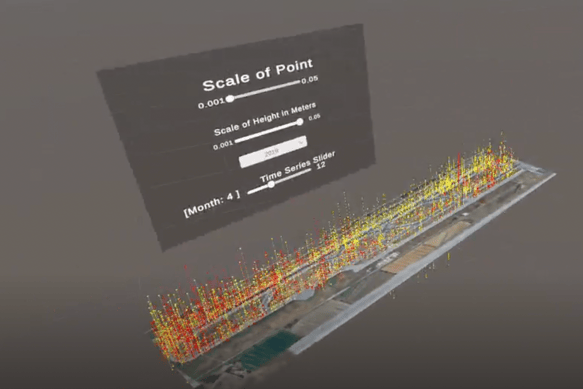

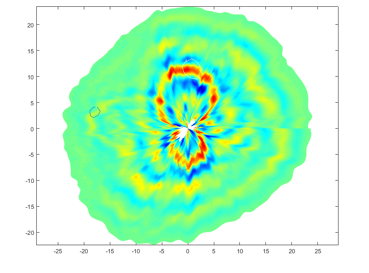

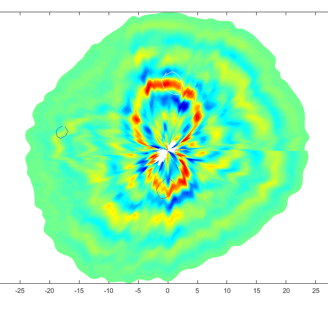

Immersive Runway Monitoring

A project investigating the visualisation of satellite-based Interferometric Synthetic-Aperture Radar (InSAR) data in Virtual Reality (VR). A prototype was developed that allowed key stakeholders to visualise historical runway deformation through time-series analysis. This project has implications for ensuring runway safety and for improving decision-making in runway management.

University of West London

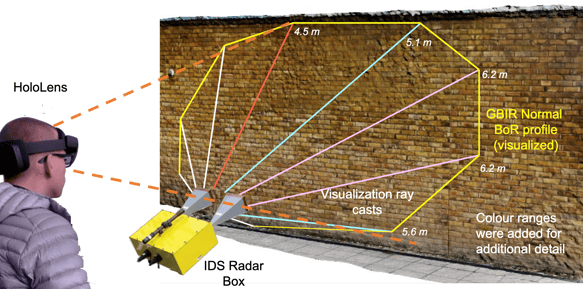

Monitoring Bridge Structures in MR

Continual monitoring of civil structures is essential for maintaining them and ensuring their safety and integrity. We developed a Mixed Reality application on the Microsoft HoloLens 2 that allows stakeholders to visualise the beam of radiation emitted by a ground-based interferometric radar (GBIR) system. The system works effectively outdoors, where infrared-based depth-mapping technology can struggle. This project delivered a relatively low-cost structural monitoring and assessment solution that allows surveyors to accurately visualise areas of interest, thereby streamlining decisions about the maintenance of crucial civil structures, e.g., walls and bridges.

University of West London

Paper 1

EGU General Assembly:

Paper 2

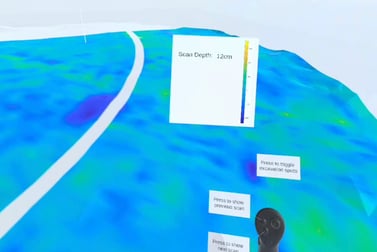

Immersive Digital Twins of Tree Trunks

Detecting decay in tree trunks is essential for assessing tree health and safety. Continual monitoring of tree trunks is possible using a digital model that stores incremental assessment data on tree health. This work investigates the design and implementation of a smartphone app that scans tree trunks to generate a 3D digital model for later visualisation and assessment. With this Mixed Reality app, a digital twin of a tree can be created and maintained by continually updating data on its dimensions and on its internal state of decay, captured using Ground Penetrating Radar (GPR).

University of West London

EGU General Assembly:

Paper 3

SPIE Optical Metrology Symposium:

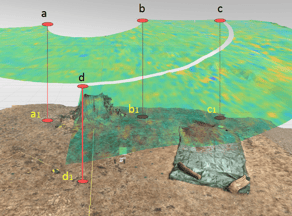

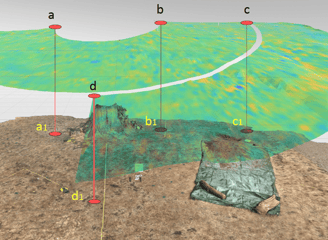

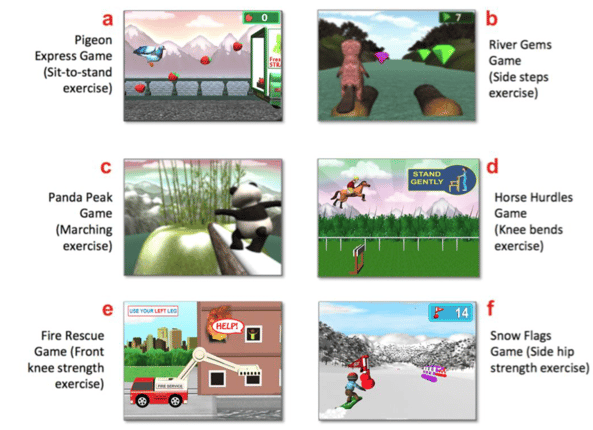

Monitoring Tree Roots and Health in VR

Non-destructive techniques such as laser scanning, acoustics, and Ground Penetrating Radar (GPR) have previously been used to study both the external and internal physical dimensions of objects and structures, including trees. We developed a Virtual Reality (VR) system that uses smartphone-based LiDAR and GPR data to capture ground surface and subsurface information in order to monitor the location of tree roots. The system provides a relatively low-cost environmental modelling and assessment solution, allowing researchers and environmental professionals to a) create digital 3D snapshots of a physical site for later assessment, b) track positional data on existing tree roots, and c) inform decisions about the locations of potential future excavations.

University of West London

EGU General Assembly:

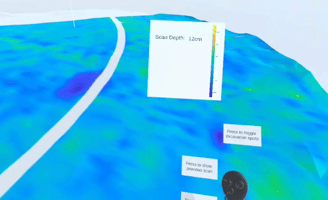

Crossing-based Interactions in XR

A project investigating the design of crossing-based interactions in spatial environments, e.g., Extended Reality (XR). Virtual-hand-based and raycast-pointing-based crossing methods were compared with gaze-based selection on the Microsoft HoloLens. A series of user studies showed that hand-based methods are more natural and intuitive than gaze-based selection and can be just as efficient, provided interactions are short. This work offers an enjoyable alternative method for interacting in XR spaces.

University of Cambridge

ACM TOCHI:

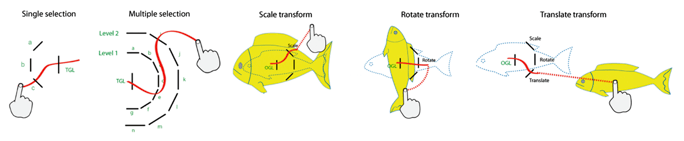

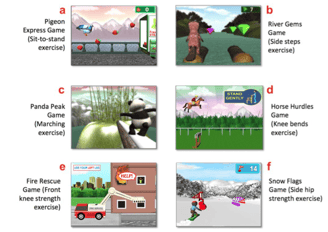

Serious Games for Elderly Rehabilitation

A project investigating the use of tailored serious games to enhance physical rehabilitation (exercise) for older adults at risk of falling. A requirements-gathering process was conducted to elicit stakeholder needs regarding effective therapy and to co-design games driven by body motion and based on established home therapy routines. A pilot randomised controlled trial showed that the games promoted consistent exercise and led to improvements in dynamic balance and muscle strength, thereby reducing fall risk in older adults.

Heriot-Watt University

ACM TOCHI:

ACM CHI:

ACM IMWUT:

Supported projects (Co-Lead)

Target Acquisition in 3D Motion-in-Depth (Spatial User Interfaces)

We conducted two studies investigating how factors including texture, shadow, alignment, movement speed, and movement direction affect user perception of 3D motion-in-depth in Virtual Reality environments.

University of Cambridge

International Journal of Human-Computer Studies (IJHCS):

AIMS: A Mobile Reminder for Stroke Rehabilitation

In this project, we applied a user-centred design process to create the Aide-Memoire Stroke (AIMS) app, which helps stroke survivors remember to exercise more frequently.

Heriot-Watt University

ACM MobileHCI:

An Interactive System for Age-Related Musculoskeletal Rehabilitation

In this project, we developed an interactive visualisation tool to support users who have had total knee arthroplasty. Using wireless inertial sensors to capture lower-limb movement, the tool allowed users to visualise their knee motion and adhere more effectively to therapist recommendations, leading to improved recovery outcomes.

Glasgow Caledonian University

INTERACT:

Designing a Visualisation Tool to Support Physical Rehabilitation

In this project, we developed an interactive visualisation tool to support users across a variety of biomechanical rehabilitation use cases, for instance, stroke, falls, and knee arthroplasty.

Glasgow Caledonian University

Health Informatics:

Location-Based Games on Mobile Devices

In this project, we designed two location-based games that used RFID and QR codes to guide users to contextual activities; for example, users went to a physical gym to train their characters. Games such as these were early explorations of concepts later popularised by GPS-based location games such as Pokémon Go.

Glasgow Caledonian University

Personal and Ubiquitous Computing:

Multimodal User Interfaces:

© 2025. All rights reserved.